Shogun is split up into libshogun, which contains all the machine learning algorithms; libshogunui, a library for the 'static interfaces'; the static interfaces python, octave, matlab and r; and the modular interfaces python_modular, octave_modular and r_modular (each found in the src/ subdirectory of the corresponding name). See src/INSTALL for instructions on how to install shogun.

If you want to extend shogun, the best way to start is by using its library directly. This is easy, as shown by a number of examples in examples/libshogun.

The simplest libshogun-based program would be

#include <shogun/base/init.h>

using namespace shogun;

int main(int argc, char** argv)
{
    init_shogun();
    exit_shogun();
    return 0;
}

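which can be compiled with g++ minimal.cpp -o minimal -lshogun (the library has to come after the source file, because many linkers resolve symbols in command-line order) and obviously does nothing, apart from initializing and destroying a couple of global shogun objects internally.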

To redirect the output of shogun's functions SG_DEBUG(), SG_INFO(), SG_WARNING(), SG_ERROR(), SG_PRINT() etc., pass your own print functions (one each for messages, warnings and errors) to init_shogun() as parameters, like this:

void print_message(FILE* target, const char* str)
{
    fprintf(target, "%s", str);
}

void print_warning(FILE* target, const char* str)
{
    fprintf(target, "%s", str);
}

void print_error(FILE* target, const char* str)
{
    fprintf(target, "%s", str);
}

// in main(), before using any other shogun functionality:
init_shogun(&print_message, &print_warning, &print_error);
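Since the handlers are plain function pointers, they do not have to write to target at all. The standalone sketch below (it does not link against shogun) collects the output in a string instead, e.g. for a GUI log window. The names capture_message, capture_warning, captured_log and dispatch are hypothetical; dispatch merely stands in for however shogun invokes the callbacks internally, and the FILE* it passes is a placeholder.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Same signature as the callbacks passed to init_shogun() above.
typedef void (*print_fn)(FILE* target, const char* str);

// Hypothetical in-memory sink for everything shogun would have printed.
static std::string captured_log;

void capture_message(FILE* /*target*/, const char* str)
{
    captured_log += str;
}

void capture_warning(FILE* /*target*/, const char* str)
{
    captured_log += "[warning] ";
    captured_log += str;
}

// Hypothetical stand-in for shogun's internal call of a handler;
// the real library chooses the FILE* itself.
void dispatch(print_fn handler, const char* str)
{
    assert(str != NULL);
    if (handler)
        handler(stdout, str);
}
```

With shogun, such handlers would be installed exactly like the ones above, e.g. init_shogun(&capture_message, &capture_warning, &print_error);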

To finally see some action, include the appropriate header files. The following example creates some features and a Gaussian kernel, and trains a LibSVM on them:

#include <shogun/features/Labels.h>
#include <shogun/features/SimpleFeatures.h>
#include <shogun/kernel/GaussianKernel.h>
#include <shogun/classifier/svm/LibSVM.h>
#include <shogun/base/init.h>
#include <shogun/lib/common.h>
#include <shogun/io/SGIO.h>

using namespace shogun;

void print_message(FILE* target, const char* str)
{
    fprintf(target, "%s", str);
}

int main(int argc, char** argv)
{
    init_shogun(&print_message);

    // create some data
    float64_t* matrix = SG_MALLOC(float64_t, 6);
    for (int32_t i=0; i<6; i++)
        matrix[i]=i;

    // create three 2-dimensional vectors
    // shogun will now own the matrix created
    CSimpleFeatures<float64_t>* features= new CSimpleFeatures<float64_t>();
    features->set_feature_matrix(matrix, 2, 3);

    // create three labels
    CLabels* labels=new CLabels(3);
    labels->set_label(0, -1);
    labels->set_label(1, +1);
    labels->set_label(2, -1);

    // create gaussian kernel with cache 10MB, width 0.5
    CGaussianKernel* kernel = new CGaussianKernel(10, 0.5);
    kernel->init(features, features);

    // create libsvm with C=10 and train
    CLibSVM* svm = new CLibSVM(10, kernel, labels);
    svm->train();

    // classify on training examples
    for (int32_t i=0; i<3; i++)
        SG_SPRINT("output[%d]=%f\n", i, svm->apply(i));

    // free up memory: releasing svm also releases kernel, labels and features
    SG_UNREF(svm);

    exit_shogun();
    return 0;
}
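To get a feeling for what the SVM sees here, the following standalone sketch computes the Gaussian kernel matrix of the three training vectors by hand. It assumes that CGaussianKernel computes k(x, x') = exp(-||x - x'||^2 / width); treat that as an assumption and check GaussianKernel.h of your shogun version. The function names are hypothetical and the code does not use shogun.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Assumed definition: k(x, y) = exp(-||x - y||^2 / width)
double gaussian_kernel(const double* x, const double* y, int dim, double width)
{
    double sq_dist = 0.0;
    for (int i = 0; i < dim; i++)
    {
        double d = x[i] - y[i];
        sq_dist += d * d;
    }
    return std::exp(-sq_dist / width);
}

// Row-major kernel matrix of num_vec column-major vectors of length dim,
// i.e. vector j starts at matrix[j*dim], as with set_feature_matrix(matrix, 2, 3).
std::vector<double> gaussian_kernel_matrix(const double* matrix, int dim,
                                           int num_vec, double width)
{
    assert(width > 0.0);
    std::vector<double> km(num_vec * num_vec);
    for (int a = 0; a < num_vec; a++)
        for (int b = 0; b < num_vec; b++)
            km[a * num_vec + b] =
                gaussian_kernel(&matrix[a * dim], &matrix[b * dim], dim, width);
    return km;
}
```

Under this assumption, with width 0.5 the off-diagonal entries are exp(-16) ≈ 1.1e-7 or smaller, so the kernel matrix is almost the identity: every training vector looks dissimilar to every other one. A larger width makes the vectors look more alike.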

Now you probably wonder why this example does not leak memory. First, passing a pointer to an array allocated with SG_MALLOC (like matrix above) makes the shogun object the owner of that array, and the object frees it when it is destroyed. Second, every shogun object keeps an internal reference counter. Whenever a shogun object is returned from or supplied as an argument to some function, its reference counter is increased. For example, in the code above

CLibSVM* svm = new CLibSVM(10, kernel, labels);

increases the reference counts of kernel and labels. When the SVM is destroyed, these counters are decreased again, and each object is freed once its counter drops to zero or below.

It is therefore your responsibility to keep objects alive if you hold a handle to them that you still intend to use later. In the example above, accessing labels after the call to SG_UNREF(svm) would cause a segmentation fault, because the labels object was already destroyed in the SVM destructor. To prevent this, increase the reference count yourself with SG_REF(obj) while the object is still alive. To decrement the reference count of an object, call SG_UNREF(obj); this automatically destroys the object if the counter drops to zero or below, and sets obj=NULL only in that case.
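The rules above can be modelled in a few lines of standalone C++. This is not shogun's implementation: RefCounted, Holder, MY_REF and MY_UNREF are hypothetical names, and the sketch assumes (as the "zero or below" rule suggests) that a freshly created object starts with a count of zero. Holder plays the role of CLibSVM holding on to the labels.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical model of shogun-style reference counting.
class RefCounted
{
public:
    RefCounted() : refcount(0) { ++alive; }
    virtual ~RefCounted() { --alive; }

    int ref() { return ++refcount; }

    // Decrement; delete the object once the count drops to zero or below.
    int unref()
    {
        int count = --refcount;
        if (count <= 0)
            delete this;
        return count;
    }

    static int alive;  // number of live objects, for demonstration only

private:
    int refcount;
};

int RefCounted::alive = 0;

// Like SG_REF/SG_UNREF as described: x is set to NULL only if it was destroyed.
#define MY_REF(x) { if (x) (x)->ref(); }
#define MY_UNREF(x) { if (x) { if ((x)->unref() <= 0) (x) = NULL; } }

// Hypothetical container: takes a reference in its constructor and
// releases it in its destructor, as the SVM does with kernel and labels.
class Holder : public RefCounted
{
public:
    explicit Holder(RefCounted* obj) : held(obj) { MY_REF(held); }
    virtual ~Holder() { MY_UNREF(held); }

private:
    RefCounted* held;
};
```

In the scenario from the text, taking an extra reference on labels before releasing the holder keeps labels alive; the final MY_UNREF then destroys it and resets the pointer.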

Generally, all shogun C++ classes are prefixed with C (e.g. CSVM) and derive from CSGObject. Since member variables higher up in the class hierarchy need to be initialized when an object is constructed, every constructor has to call the constructor of its base class: CSVM calls CKernelMachine, CKernelMachine calls CClassifier, which finally calls CSGObject.

For example, if you implement your own SVM called MySVM, its constructor and destructor would look like this:

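class MySVM : public CSVM
{
public:
    // call the base class constructor, so that CSVM (and through it
    // CKernelMachine, CClassifier and CSGObject) gets initialized
    MySVM() : CSVM()
    {
    }

    virtual ~MySVM()
    {
    }
};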

Also make sure that you declare the destructor virtual.
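Both points can be seen in a standalone sketch with a hypothetical three-level hierarchy (Base, Middle and Leaf stand in for CSGObject, CSVM and MySVM; none of this is shogun code). Constructors run from the base downwards, and because the object is deleted through a base-class pointer, as happens when reference counting frees it, only a virtual destructor guarantees that the derived destructors run too. Without it, the delete below would be undefined behaviour.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Records the order of constructor and destructor calls.
static std::vector<std::string> trace;

class Base
{
public:
    Base() { trace.push_back("Base()"); }
    virtual ~Base() { trace.push_back("~Base()"); }  // must be virtual
};

class Middle : public Base
{
public:
    Middle() : Base() { trace.push_back("Middle()"); }
    virtual ~Middle() { trace.push_back("~Middle()"); }
};

class Leaf : public Middle
{
public:
    Leaf() : Middle() { trace.push_back("Leaf()"); }
    virtual ~Leaf() { trace.push_back("~Leaf()"); }
};
```

Creating a Leaf through a Base pointer and deleting it records Base(), Middle(), Leaf(), then ~Leaf(), ~Middle(), ~Base().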

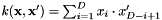

We are now going to define our own kernel, a linear-like kernel on standard double-precision floating-point vectors. We define it as

$$k(\mathbf{x}, \mathbf{x}') = \sum_{i=1}^{D} x_i \, x'_{D-i+1}$$

where D is the dimensionality of the data. To implement this kernel, we derive a class, say CReverseLinearKernel, from CSimpleKernel<float64_t> (for strings it would be CStringKernel, for sparse features CSparseKernel). The complete example further down derives from CDotKernel instead; in either case compute() is the function to override.

Essentially, we only need to override the CKernel::compute() function with our own implementation; everything else gets empty defaults. Our compute() function could look like this:

virtual float64_t compute(int32_t idx_a, int32_t idx_b)
{
    int32_t alen, blen;
    bool afree, bfree;

    float64_t* avec=
        ((CSimpleFeatures<float64_t>*) lhs)->get_feature_vector(idx_a, alen, afree);
    float64_t* bvec=
        ((CSimpleFeatures<float64_t>*) rhs)->get_feature_vector(idx_b, blen, bfree);
    ASSERT(alen==blen);

    float64_t result=0;
    for (int32_t i=0; i<alen; i++)
        result+=avec[i]*bvec[alen-i-1];

    ((CSimpleFeatures<float64_t>*) lhs)->free_feature_vector(avec, idx_a, afree);
    ((CSimpleFeatures<float64_t>*) rhs)->free_feature_vector(bvec, idx_b, bfree);

    return result;
}

So for two indices idx_a (for vector a) and idx_b (for vector b), we obtain pointers to the corresponding feature vectors avec and bvec, do our two-line computation (the for loop in the middle) and "free" the feature vectors again. In most cases, getting a feature vector is actually a single memory access (and free_feature_vector is then a no-op). However, when preprocessor objects are attached to the feature object, they may process the vector on the fly; the returned vector can then be a temporary copy (signalled by afree/bfree), which is why free_feature_vector must always be called.
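To check the arithmetic independently of shogun, here is a standalone sketch of the same computation on plain arrays (reverse_linear and reverse_linear_matrix are hypothetical names). It uses the data of the examples: the six values 0..5 interpreted as three column-major 2-dimensional vectors (0,1), (2,3) and (4,5).

```cpp
#include <cassert>
#include <vector>

// 0-based form of the loop in compute(): sum_i a[i] * b[dim-1-i]
double reverse_linear(const double* a, const double* b, int dim)
{
    double result = 0.0;
    for (int i = 0; i < dim; i++)
        result += a[i] * b[dim - i - 1];
    return result;
}

// Row-major kernel matrix of num_vec column-major vectors of length dim,
// laid out like the data passed to set_feature_matrix(matrix, 2, 3).
std::vector<double> reverse_linear_matrix(const double* matrix, int dim, int num_vec)
{
    assert(dim > 0 && num_vec > 0);
    std::vector<double> km(num_vec * num_vec);
    for (int a = 0; a < num_vec; a++)
        for (int b = 0; b < num_vec; b++)
            km[a * num_vec + b] =
                reverse_linear(&matrix[a * dim], &matrix[b * dim], dim);
    return km;
}
```

For this data the matrix rows are (0, 2, 4), (2, 12, 22) and (4, 22, 40). Assuming the default identity kernel normalizer, these are the values the complete example that follows should print.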

A complete, working example could look like this:

#include <shogun/features/SimpleFeatures.h>
#include <shogun/kernel/DotKernel.h>
#include <shogun/base/init.h>
#include <shogun/lib/common.h>
#include <shogun/io/SGIO.h>
#include <stdio.h>

using namespace shogun;

class CReverseLinearKernel : public CDotKernel
{
public:
    /** default constructor */
    CReverseLinearKernel() : CDotKernel(0)
    {
    }

    /** destructor */
    virtual ~CReverseLinearKernel()
    {
    }

    /** initialize kernel
     *
     * @param l features of left-hand side
     * @param r features of right-hand side
     * @return if initializing was successful
     */
    virtual bool init(CFeatures* l, CFeatures* r)
    {
        CDotKernel::init(l, r);
        return init_normalizer();
    }

    /** load kernel init_data
     *
     * @param src file to load from
     * @return if loading was successful
     */
    virtual bool load_init(FILE* src)
    {
        return false;
    }

    /** save kernel init_data
     *
     * @param dest file to save to
     * @return if saving was successful
     */
    virtual bool save_init(FILE* dest)
    {
        return false;
    }

    /** return what type of kernel we are
     *
     * @return kernel type UNKNOWN (as it is not
     * officially part of shogun)
     */
    virtual EKernelType get_kernel_type()
    {
        return K_UNKNOWN;
    }

    /** return the kernel's name
     *
     * @return name "ReverseLinear"
     */
    inline virtual const char* get_name() const
    {
        return "ReverseLinear";
    }

protected:
    /** compute kernel function for features a and b
     * idx_{a,b} denote the index of the feature vectors
     * in the corresponding feature object
     *
     * @param idx_a index a
     * @param idx_b index b
     * @return computed kernel function at indices a,b
     */
    virtual float64_t compute(int32_t idx_a, int32_t idx_b)
    {
        int32_t alen, blen;
        bool afree, bfree;

        float64_t* avec=
            ((CSimpleFeatures<float64_t>*) lhs)->get_feature_vector(idx_a, alen, afree);
        float64_t* bvec=
            ((CSimpleFeatures<float64_t>*) rhs)->get_feature_vector(idx_b, blen, bfree);
        ASSERT(alen==blen);

        float64_t result=0;
        for (int32_t i=0; i<alen; i++)
            result+=avec[i]*bvec[alen-i-1];

        ((CSimpleFeatures<float64_t>*) lhs)->free_feature_vector(avec, idx_a, afree);
        ((CSimpleFeatures<float64_t>*) rhs)->free_feature_vector(bvec, idx_b, bfree);

        return result;
    }
};

void print_message(FILE* target, const char* str)
{
    fprintf(target, "%s", str);
}

int main(int argc, char** argv)
{
    init_shogun(&print_message);

    // create some data
    float64_t* matrix = SG_MALLOC(float64_t, 6);
    for (int32_t i=0; i<6; i++)
        matrix[i]=i;

    // create three 2-dimensional vectors
    // shogun will now own the matrix created
    CSimpleFeatures<float64_t>* features= new CSimpleFeatures<float64_t>();
    features->set_feature_matrix(matrix, 2, 3);

    // create reverse linear kernel
    CReverseLinearKernel* kernel = new CReverseLinearKernel();
    kernel->init(features,features);

    // print kernel matrix
    for (int32_t i=0; i<3; i++)
    {
        for (int32_t j=0; j<3; j++)
            SG_SPRINT("%f ", kernel->kernel(i,j));
        SG_SPRINT("\n");
    }

    // free up memory: releasing kernel also releases features
    SG_UNREF(kernel);

    exit_shogun();
    return 0;
}

As you can see, only a few other functions are defined: they return the name of the object and its type id, and allow loading/saving of kernel initialization data. No magic really; the same holds when you want to incorporate a new SVM (derive from CSVM, or from CLinearClassifier if it is a linear SVM) or create new feature objects (derive from CFeatures, or from CSimpleFeatures, CStringFeatures or CSparseFeatures). For the SVM you would only have to override the CSVM::train() function; parameter settings like epsilon and C, as well as evaluating the SVM, are handled by the CSVM base class.
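The division of labour described above (the base class owns the model and the evaluation, the subclass only implements training) can be sketched without shogun. LinearMachineSketch and CentroidMachine are hypothetical classes, not shogun's CLinearClassifier API, and the training rule is a simple nearest-centroid classifier swapped in for an actual SVM solver purely to keep the example short.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical base class: owns w and bias and implements apply().
class LinearMachineSketch
{
public:
    LinearMachineSketch() : bias(0.0) {}
    virtual ~LinearMachineSketch() {}

    // The only thing a subclass has to provide.
    virtual bool train(const std::vector<std::vector<double> >& x,
                       const std::vector<double>& y) = 0;

    // Shared evaluation: f(x) = w.x + bias
    double apply(const std::vector<double>& x) const
    {
        assert(x.size() == w.size());
        double out = bias;
        for (std::size_t i = 0; i < x.size(); i++)
            out += w[i] * x[i];
        return out;
    }

protected:
    std::vector<double> w;
    double bias;
};

// Hypothetical subclass: nearest-centroid rule, NOT an SVM.
class CentroidMachine : public LinearMachineSketch
{
public:
    virtual bool train(const std::vector<std::vector<double> >& x,
                       const std::vector<double>& y)
    {
        if (x.empty() || x.size() != y.size())
            return false;
        std::size_t dim = x[0].size();
        std::vector<double> pos(dim, 0.0), neg(dim, 0.0);
        int n_pos = 0, n_neg = 0;
        for (std::size_t j = 0; j < x.size(); j++)
        {
            if (x[j].size() != dim)
                return false;
            std::vector<double>& mean = (y[j] > 0) ? pos : neg;
            for (std::size_t i = 0; i < dim; i++)
                mean[i] += x[j][i];
            if (y[j] > 0) n_pos++; else n_neg++;
        }
        if (n_pos == 0 || n_neg == 0)
            return false;
        w.assign(dim, 0.0);
        bias = 0.0;
        for (std::size_t i = 0; i < dim; i++)
        {
            pos[i] /= n_pos;
            neg[i] /= n_neg;
            w[i] = pos[i] - neg[i];
            // decision boundary passes through the midpoint of the two means
            bias -= w[i] * 0.5 * (pos[i] + neg[i]);
        }
        return true;
    }
};
```

Replacing CentroidMachine::train() with a real solver would leave apply() and the stored model untouched, which is the point of keeping such logic in the base class.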

If you want to integrate this into shogun's modular interfaces, all you have to do is put the class in a header file and include that header in the corresponding .i file (in this case src/modular/Kernel.i). It is easiest to search for a similarly wrapped object and fill in the same three lines. First, include the header in the %{ %} block, whose contents swig (the program we use to generate the modular python/octave interface wrappers) does not parse but copies verbatim into the generated wrapper code:

%{
#include <shogun/kernel/ReverseLinearKernel.h>
%}

then remove the C prefix (if your class has one) from the name exposed in the interfaces:

%rename(ReverseLinearKernel) CReverseLinearKernel;

and finally tell swig to wrap everything declared in the header:

%include <shogun/kernel/ReverseLinearKernel.h>

Once you have your object working, we will happily integrate it into shogun, provided you follow a number of basic coding conventions detailed in README.developer (see FORMATTING for formatting instructions, MACROS on how to use and name macros, TYPES on which types to use, FUNCTIONS on how functions should look and NAMING CONVENTIONS for the naming scheme). Note that if you change the API in a way that breaks ABI compatibility, you need to increase the major number of the libshogun soname (see Libshogun SONAME).