SHOGUN 4.1.0

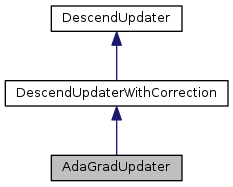

The class implements the AdaGrad method.

\[ \begin{array}{l} g_\theta={(\frac{ \partial f(\cdot) }{\partial \theta })}^2+g_\theta\\ d_\theta=\alpha\frac{1}{\sqrt{g_\theta+\epsilon}}\frac{ \partial f(\cdot) }{\partial \theta }\\ \end{array} \]

where \( \frac{ \partial f(\cdot) }{\partial \theta } \) is a negative descent direction (e.g., the gradient) with respect to \(\theta\), \(\epsilon\) is a small constant used to avoid dividing by 0, \( \alpha \) is the built-in learning rate, and \(d_\theta\) is the corrected negative descent direction.

Duchi, John, Elad Hazan, and Yoram Singer. "Adaptive subgradient methods for online learning and stochastic optimization." The Journal of Machine Learning Research 12 (2011): 2121-2159.
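As a worked illustration of the two update equations for a single variable, the values below are assumptions chosen for easy arithmetic, not defaults of the class: \( \alpha = 0.1 \), \( \epsilon \) small enough to be neglected, and \( g_\theta \) starting at 0. The gradient is 2 on the first step and 1 on the second.

\[ \begin{array}{ll} \text{step 1:} & g_\theta = 2^2 + 0 = 4, \quad d_\theta = 0.1 \cdot \frac{1}{\sqrt{4+\epsilon}} \cdot 2 \approx 0.1 \\ \text{step 2:} & g_\theta = 1^2 + 4 = 5, \quad d_\theta = 0.1 \cdot \frac{1}{\sqrt{5+\epsilon}} \cdot 1 \approx 0.0447 \end{array} \]

The second gradient is half the first, yet the second step is less than half as large, because the accumulated \( g_\theta \) keeps shrinking the effective learning rate.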

Definition at line 56 of file AdaGradUpdater.h.

Public Member Functions

| AdaGradUpdater () | |

| AdaGradUpdater (float64_t learning_rate, float64_t epsilon) | |

| virtual | ~AdaGradUpdater () |

| virtual void | set_learning_rate (float64_t learning_rate) |

| virtual void | set_epsilon (float64_t epsilon) |

| virtual void | update_context (CMinimizerContext *context) |

| virtual void | load_from_context (CMinimizerContext *context) |

| virtual void | update_variable (SGVector< float64_t > variable_reference, SGVector< float64_t > raw_negative_descend_direction, float64_t learning_rate) |

| virtual void | set_descend_correction (DescendCorrection *correction) |

| virtual bool | enables_descend_correction () |

Protected Member Functions

| virtual float64_t | get_negative_descend_direction (float64_t variable, float64_t gradient, index_t idx, float64_t learning_rate) |

Protected Attributes

| float64_t | m_build_in_learning_rate |

| float64_t | m_epsilon |

| SGVector< float64_t > | m_gradient_accuracy |

| DescendCorrection * | m_correction |

| AdaGradUpdater () |

Definition at line 36 of file AdaGradUpdater.cpp.

| AdaGradUpdater (float64_t learning_rate, float64_t epsilon) |

Parameterized Constructor

| learning_rate | built-in learning rate \( \alpha \) |

| epsilon | constant \( \epsilon \) used to avoid dividing by 0 |

Definition at line 42 of file AdaGradUpdater.cpp.

| virtual | ~AdaGradUpdater () |

Definition at line 64 of file AdaGradUpdater.cpp.

| virtual bool | enables_descend_correction () | inherited |

Whether descent correction is enabled.

Definition at line 145 of file DescendUpdaterWithCorrection.h.

| virtual float64_t | get_negative_descend_direction (float64_t variable, float64_t gradient, index_t idx, float64_t learning_rate) | protected |

Get the negative descent direction given the current variable and gradient.

It is called by update_variable().

| variable | current variable (e.g., \(\theta\)) |

| gradient | current gradient (e.g., \( \frac{ \partial f(\cdot) }{\partial \theta }\)) |

| idx | the index of the variable |

| learning_rate | learning rate (for AdaGrad, learning_rate is NOT used because there is a built-in learning rate) |

Implements DescendUpdaterWithCorrection.

Definition at line 98 of file AdaGradUpdater.cpp.

| virtual void | load_from_context (CMinimizerContext *context) |

Load mutable variables from a context object. Usually it is used in deserialization.

This method will be called by FirstOrderMinimizer::load_from_context(CMinimizerContext* context)

Reimplemented from DescendUpdaterWithCorrection.

Definition at line 87 of file AdaGradUpdater.cpp.

| virtual void | set_descend_correction (DescendCorrection *correction) | inherited |

Set the type of descent correction

| correction | the type of descent correction |

Definition at line 135 of file DescendUpdaterWithCorrection.h.

| virtual void | set_epsilon (float64_t epsilon) |

Set epsilon

| epsilon | epsilon must be positive |

Definition at line 57 of file AdaGradUpdater.cpp.

| virtual void | set_learning_rate (float64_t learning_rate) |

Set learning rate

| learning_rate | learning rate |

Definition at line 50 of file AdaGradUpdater.cpp.

| virtual void | update_context (CMinimizerContext *context) |

Update a context object to store mutable variables

This method will be called by FirstOrderMinimizer::save_to_context()

| context | a context object |

Reimplemented from DescendUpdaterWithCorrection.

Definition at line 75 of file AdaGradUpdater.cpp.

| virtual void | update_variable (SGVector< float64_t > variable_reference, SGVector< float64_t > raw_negative_descend_direction, float64_t learning_rate) |

Update the target variable based on the given negative descent direction

Note that this method will update the target variable in place. This method will be called by FirstOrderMinimizer::minimize()

| variable_reference | a reference to the target variable |

| raw_negative_descend_direction | the negative descent direction given the current value |

| learning_rate | learning rate |

Reimplemented from DescendUpdaterWithCorrection.

Definition at line 108 of file AdaGradUpdater.cpp.
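The in-place update described above can be sketched in plain C++ without any Shogun types. This is a minimal illustration under stated assumptions, not the library's implementation: `AdaGradSketch`, `direction`, and `update` are hypothetical names, `std::vector<double>` stands in for `SGVector< float64_t >`, the accumulator \( g_\theta \) is assumed to start at zero, and the corrected direction is assumed to be subtracted from the variable.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the updater; not the Shogun class itself.
struct AdaGradSketch
{
    double learning_rate;            // built-in learning rate (alpha)
    double epsilon;                  // small positive constant (epsilon)
    std::vector<double> accumulated; // g_theta, one entry per variable

    AdaGradSketch(double lr, double eps) : learning_rate(lr), epsilon(eps) {}

    // Corrected negative descent direction d_theta for the element at idx.
    // Accumulates the squared gradient first, as in the class formula.
    double direction(double gradient, std::size_t idx)
    {
        accumulated[idx] += gradient * gradient;
        return learning_rate * gradient / std::sqrt(accumulated[idx] + epsilon);
    }

    // Update `variable` in place, one element at a time.
    // Assumption: the accumulator is (re)sized and zeroed on first use,
    // and the corrected direction is subtracted from the variable.
    void update(std::vector<double>& variable, const std::vector<double>& gradient)
    {
        if (accumulated.size() != variable.size())
            accumulated.assign(variable.size(), 0.0);
        for (std::size_t i = 0; i < variable.size(); ++i)
            variable[i] -= direction(gradient[i], i);
    }
};
```

Calling `update` repeatedly with the same `AdaGradSketch` object carries the accumulator from one call to the next, which is why a class like this has mutable state that needs to be saved and restored alongside the variable.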

| float64_t | m_build_in_learning_rate | protected |

learning_rate \( \alpha \) at iteration

Definition at line 134 of file AdaGradUpdater.h.

| DescendCorrection * | m_correction | protected, inherited |

descent correction object

Definition at line 165 of file DescendUpdaterWithCorrection.h.

| float64_t | m_epsilon | protected |

\( \epsilon \)

Definition at line 137 of file AdaGradUpdater.h.

| SGVector< float64_t > | m_gradient_accuracy | protected |

\( g_\theta \), the accumulated squared gradients

Definition at line 140 of file AdaGradUpdater.h.