
SHOGUN

4.1.0

|

All Classes Namespaces Files Functions Variables Typedefs Enumerations Enumerator Friends Macros Modules Pages

|

SHOGUN

4.1.0

|

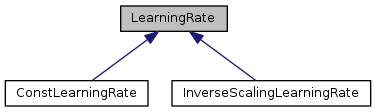

The base class for learning rates used by descent-based minimizers.

This interface is used in descent-based minimizers (e.g., GradientDescendUpdater::update_variable(SGVector<float64_t> variable_reference, SGVector<float64_t> gradient)).

Definition at line 46 of file LearningRate.h.
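A descent-based minimizer typically asks its learning rate for a step size at each iteration and scales the gradient by it. The following is a minimal, self-contained C++ sketch of that interaction, not Shogun code: `LearningRateSketch`, `ConstantRateSketch`, `descent_step` and `run_demo` are hypothetical names, `std::vector<double>` stands in for `SGVector<float64_t>`, and plain gradient descent (x ← x − η·g, with η taken from `get_learning_rate`) is assumed as the update rule.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for the rate-query part of the LearningRate interface.
class LearningRateSketch
{
public:
    virtual ~LearningRateSketch() = default;
    // Returns the (usually positive) step size for the given iteration.
    virtual double get_learning_rate(int32_t iter_counter) = 0;
};

// Hypothetical constant schedule: the same step size at every iteration.
class ConstantRateSketch : public LearningRateSketch
{
public:
    explicit ConstantRateSketch(double rate) : m_rate(rate) {}
    double get_learning_rate(int32_t /*iter_counter*/) override { return m_rate; }

private:
    double m_rate;
};

// Assumed plain gradient-descent update, applied in place:
// variable[i] -= rate * gradient[i]
void descent_step(std::vector<double>& variable, const std::vector<double>& gradient,
                  LearningRateSketch& learning_rate, int32_t iter_counter)
{
    assert(variable.size() == gradient.size());
    const double rate = learning_rate.get_learning_rate(iter_counter);
    for (std::size_t i = 0; i < variable.size(); ++i)
        variable[i] -= rate * gradient[i];
}

// Hypothetical demo: minimize f(x) = x^2 (gradient 2x) from x = 4 for `steps` iterations.
double run_demo(int32_t steps)
{
    std::vector<double> x = {4.0};
    ConstantRateSketch rate(0.25);
    for (int32_t iter = 0; iter < steps; ++iter)
    {
        const std::vector<double> grad = {2.0 * x[0]};
        descent_step(x, grad, rate, iter);
    }
    return x[0];
}
```

With a constant rate of 0.25 on f(x) = x², each step maps x to x − 0.25·2x = 0.5x, so starting from x = 4, `run_demo(3)` returns 0.5. Keeping the schedule behind a virtual interface lets the same update code run with any learning-rate policy.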

Public Member Functions

| Return type | Function |
|---|---|
| virtual float64_t | get_learning_rate (int32_t iter_counter)=0 |
| virtual void | update_context (CMinimizerContext *context)=0 |
| virtual void | load_from_context (CMinimizerContext *context)=0 |

virtual float64_t get_learning_rate (int32_t iter_counter) [pure virtual]

Get the learning rate for the descent direction. Note that the learning rate is usually positive.

| Parameter | Description |
|---|---|
| iter_counter | the number of iterations |

Implemented in ConstLearningRate and InverseScalingLearningRate.
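To illustrate how an implementation might turn `iter_counter` into a step size, here is a hypothetical inverse-scaling schedule in C++. The formula η_t = η₀ / (a + b·t)^p is an assumption modeled on common inverse-scaling schedules, not a transcription of Shogun's `InverseScalingLearningRate`; the class and parameter names are illustrative only.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical stand-in for the rate-query part of the LearningRate interface.
class LearningRateSketch
{
public:
    virtual ~LearningRateSketch() = default;
    virtual double get_learning_rate(int32_t iter_counter) = 0;
};

// Hypothetical inverse-scaling schedule (assumed formula):
//   rate(t) = initial / (intercept + slope * t)^exponent
class InverseScalingRateSketch : public LearningRateSketch
{
public:
    InverseScalingRateSketch(double initial, double intercept, double slope, double exponent)
        : m_initial(initial), m_intercept(intercept), m_slope(slope), m_exponent(exponent)
    {
        // A positive base keeps the rate finite and positive for every t >= 0.
        assert(initial > 0.0 && intercept > 0.0 && slope >= 0.0 && exponent >= 0.0);
    }

    double get_learning_rate(int32_t iter_counter) override
    {
        assert(iter_counter >= 0);
        const double base = m_intercept + m_slope * static_cast<double>(iter_counter);
        return m_initial / std::pow(base, m_exponent);
    }

private:
    double m_initial;
    double m_intercept;
    double m_slope;
    double m_exponent;
};
```

With η₀ = 1, a = 1, b = 1 and p = 1, the rate at iteration 3 is 1 / (1 + 3) = 0.25. A larger exponent p makes the rate decay faster, while p = 0 reduces the schedule to the constant rate η₀.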

virtual void load_from_context (CMinimizerContext *context) [pure virtual]

Restore mutable variables from the given context object.

| Parameter | Description |
|---|---|
| context | a context object |

Implemented in InverseScalingLearningRate and ConstLearningRate.

virtual void update_context (CMinimizerContext *context) [pure virtual]

Update the given context object to store the mutable variables used by the learning rate.

| Parameter | Description |
|---|---|
| context | a context object |

Implemented in InverseScalingLearningRate and ConstLearningRate.
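The store/restore contract of `update_context` and `load_from_context` can be sketched as a round trip. In this C++ sketch, `CMinimizerContext` is replaced by a hypothetical `MiniContextSketch` that maps string keys to doubles; its methods, the key name, and the decaying schedule `DecayingRateSketch` are illustrative assumptions, not Shogun's API or storage layout. The point shown is that whatever `update_context` writes, `load_from_context` reads back, so a minimizer could pause and later resume with the same learning-rate state.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>

// Hypothetical stand-in for CMinimizerContext: named double-valued slots.
class MiniContextSketch
{
public:
    void set_value(const std::string& key, double value) { m_values[key] = value; }
    // Throws std::out_of_range if the key was never stored.
    double get_value(const std::string& key) const { return m_values.at(key); }

private:
    std::map<std::string, double> m_values;
};

// Hypothetical interface mirroring the three pure virtual members of LearningRate.
class LearningRateSketch
{
public:
    virtual ~LearningRateSketch() = default;
    virtual double get_learning_rate(int32_t iter_counter) = 0;
    virtual void update_context(MiniContextSketch* context) = 0;
    virtual void load_from_context(MiniContextSketch* context) = 0;
};

// Hypothetical schedule whose step size shrinks by `decay` on every call,
// so the current step size is mutable state that must survive save/restore.
class DecayingRateSketch : public LearningRateSketch
{
public:
    DecayingRateSketch(double initial, double decay) : m_current(initial), m_decay(decay) {}

    double get_learning_rate(int32_t /*iter_counter*/) override
    {
        const double rate = m_current;
        m_current *= m_decay;  // advance the state for the next call
        return rate;
    }

    // Store the mutable state (m_decay is fixed configuration, so it is not saved).
    void update_context(MiniContextSketch* context) override
    {
        assert(context != nullptr);
        context->set_value("DecayingRateSketch::current", m_current);
    }

    // Restore the mutable state written by update_context.
    void load_from_context(MiniContextSketch* context) override
    {
        assert(context != nullptr);
        m_current = context->get_value("DecayingRateSketch::current");
    }

private:
    double m_current;
    double m_decay;
};

// Save after two steps, take a third, restore, and return the next rate.
double resume_demo()
{
    DecayingRateSketch rate(1.0, 0.5);
    rate.get_learning_rate(0);         // 1.0; state becomes 0.5
    rate.get_learning_rate(1);         // 0.5; state becomes 0.25
    MiniContextSketch context;
    rate.update_context(&context);     // saves 0.25
    rate.get_learning_rate(2);         // 0.25; state becomes 0.125
    rate.load_from_context(&context);  // restores 0.25
    return rate.get_learning_rate(2);  // 0.25 again
}
```

`resume_demo()` returns 0.25, the same rate the third call produced before the restore, so the schedule resumes from the saved point rather than from its initial value.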