|

SHOGUN

4.1.0

|

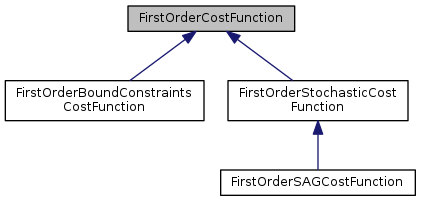

The first order cost function base class.

This class defines the interface used by first-order, gradient-based unconstrained minimizers.

For example, consider the least squares cost function \(f(w)\):

\[ f(w)=\sum_i{(y_i-w^T x_i)^2} \]

where \(w\) is the target variable, \(x_i\) is the feature vector of the i-th sample, and \(y_i\) is the label of the i-th sample.

Definition at line 50 of file FirstOrderCostFunction.h.

Public Member Functions

| Return type | Member |
|---|---|
| virtual float64_t | get_cost ()=0 |
| virtual SGVector< float64_t > | obtain_variable_reference ()=0 |
| virtual SGVector< float64_t > | get_gradient ()=0 |

| virtual float64_t get_cost () | pure virtual |

Get the cost at the current values of the target variables.

For least squares, this is the value of \(f(w)\) at the current \(w\).

This method is called by FirstOrderMinimizer::minimize().

Implemented in FirstOrderSAGCostFunction, and FirstOrderStochasticCostFunction.

| virtual SGVector< float64_t > get_gradient () | pure virtual |

Get the gradient with respect to the target variables.

For least squares, this is the value of \(\frac{\partial f(w) }{\partial w}\) at the current \(w\).

This method is called by FirstOrderMinimizer::minimize().

Implemented in FirstOrderSAGCostFunction, and FirstOrderStochasticCostFunction.
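As a worked example, differentiating the least squares cost \(f(w)=\sum_i{(y_i-w^T x_i)^2}\) term by term gives the gradient that an implementation of get_gradient() would be expected to return for that cost. This is ordinary calculus applied to the formula above, not a statement about any particular Shogun subclass:

\[ \frac{\partial f(w)}{\partial w} = -2\sum_i{(y_i-w^T x_i)\, x_i} \]

The gradient vanishes when every residual \(y_i-w^T x_i\) is orthogonal to the features, which is the usual least squares optimality condition.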

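| virtual SGVector< float64_t > obtain_variable_reference () | pure virtual |

Obtain a reference to the target variables. Minimizers modify the target variables in place through this reference.

For least squares, the target variable is \(w\).

This method is called by FirstOrderMinimizer::minimize().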
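The three methods can be illustrated with a minimal, self-contained sketch for the least squares example. It does not use Shogun: `CostFunctionSketch`, `LeastSquaresSketch`, and `minimize_sketch` are hypothetical names, `std::vector<double>` stands in for `SGVector< float64_t >`, and the plain gradient descent loop is only an assumption about how a minimizer might drive the interface, not the behaviour of FirstOrderMinimizer::minimize().

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical stand-in for the interface described above.
// std::vector<double> replaces SGVector<float64_t>; this is not Shogun code.
class CostFunctionSketch {
public:
    virtual ~CostFunctionSketch() = default;
    virtual double get_cost() = 0;
    virtual std::vector<double>& obtain_variable_reference() = 0;
    virtual std::vector<double> get_gradient() = 0;
};

// Least squares cost f(w) = sum_i (y_i - w^T x_i)^2, with w initialised to zero.
class LeastSquaresSketch : public CostFunctionSketch {
public:
    LeastSquaresSketch(std::vector<std::vector<double>> x, std::vector<double> y)
        : x_(std::move(x)), y_(std::move(y)),
          w_(x_.empty() ? 0 : x_[0].size(), 0.0) {
        assert(x_.size() == y_.size());
    }

    // Value of f(w) at the current w.
    double get_cost() override {
        double cost = 0.0;
        for (std::size_t i = 0; i < x_.size(); ++i) {
            const double r = residual(i);
            cost += r * r;
        }
        return cost;
    }

    // The minimizer writes to w through this reference.
    std::vector<double>& obtain_variable_reference() override { return w_; }

    // Gradient of f at the current w: -2 * sum_i (y_i - w^T x_i) x_i.
    std::vector<double> get_gradient() override {
        std::vector<double> g(w_.size(), 0.0);
        for (std::size_t i = 0; i < x_.size(); ++i) {
            const double r = residual(i);
            for (std::size_t j = 0; j < w_.size(); ++j) {
                g[j] += -2.0 * r * x_[i][j];
            }
        }
        return g;
    }

private:
    // Residual y_i - w^T x_i for sample i.
    double residual(std::size_t i) const {
        double prediction = 0.0;
        for (std::size_t j = 0; j < w_.size(); ++j) {
            prediction += w_[j] * x_[i][j];
        }
        return y_[i] - prediction;
    }

    std::vector<std::vector<double>> x_;  // features, one row per sample
    std::vector<double> y_;               // labels
    std::vector<double> w_;               // target variable
};

// Hypothetical fixed-step gradient descent: updates w in place and
// returns the final cost.
double minimize_sketch(CostFunctionSketch& f, double step, int iterations) {
    std::vector<double>& w = f.obtain_variable_reference();
    for (int it = 0; it < iterations; ++it) {
        const std::vector<double> g = f.get_gradient();
        for (std::size_t j = 0; j < w.size(); ++j) {
            w[j] -= step * g[j];
        }
    }
    return f.get_cost();
}
```

Because the loop holds a reference to the same storage that the cost function reads, each call to get_gradient() and get_cost() sees the updated \(w\) without any copy back. This is the reason the interface hands out a reference rather than a value.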