|

SHOGUN

4.1.0

|

|

SHOGUN

4.1.0

|

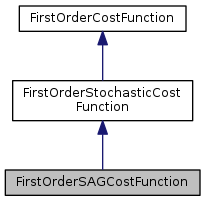

This class defines a stochastic cost function for stochastic average minimizers.

The cost function must be expressible as a finite sum of sample-specific costs. For example, the least squares cost function is

\[ f(w)=\frac{ \sum_i^n{ (y_i-w^T x_i)^2 } }{2} \]

where \(n\) is the sample size, \((y_i,x_i)\) is the \(i\)-th sample, \(y_i\) is its label, and \(x_i\) is its feature vector.
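The finite-sum requirement can be made concrete with a minimal C++ sketch of the least squares example. The names `Vec`, `dot`, `sample_cost` and `least_squares_cost` are hypothetical helpers, not Shogun API, and `std::vector<double>` stands in for Shogun's own vector type.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper type for this sketch (not Shogun API).
using Vec = std::vector<double>;

// Dot product w^T x.
double dot(const Vec& w, const Vec& x) {
    assert(w.size() == x.size());
    double s = 0.0;
    for (std::size_t j = 0; j < w.size(); ++j) s += w[j] * x[j];
    return s;
}

// Sample-specific cost f_i(w) = (y_i - w^T x_i)^2 / 2.
double sample_cost(const Vec& w, const Vec& x_i, double y_i) {
    const double r = y_i - dot(w, x_i);
    return 0.5 * r * r;
}

// Total cost f(w) = sum_i f_i(w): the finite sum of sample costs.
double least_squares_cost(const Vec& w, const std::vector<Vec>& X, const Vec& y) {
    assert(X.size() == y.size());
    double total = 0.0;
    for (std::size_t i = 0; i < X.size(); ++i) total += sample_cost(w, X[i], y[i]);
    return total;
}
```

Because the total is a plain sum of `sample_cost` terms, a stochastic minimizer can work with one term at a time.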

A stochastic average minimizer uses the average of the sample gradients (get_average_gradient()) to reduce the variance of its stochastic gradient estimates.

Well-known stochastic average methods include:

SVRG: Johnson, Rie, and Tong Zhang. "Accelerating stochastic gradient descent using predictive variance reduction." Advances in Neural Information Processing Systems. 2013.

SAG: Schmidt, Mark, Nicolas Le Roux, and Francis Bach. "Minimizing finite sums with the stochastic average gradient." arXiv preprint arXiv:1309.2388 (2013).

SAGA: Defazio, Aaron, Francis Bach, and Simon Lacoste-Julien. "SAGA: A fast incremental gradient method with support for non-strongly convex composite objectives." Advances in Neural Information Processing Systems. 2014.

SDCA: Shalev-Shwartz, Shai, and Tong Zhang. "Stochastic dual coordinate ascent methods for regularized loss." The Journal of Machine Learning Research 14.1 (2013): 567-599.
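The variance-reduction idea behind these methods can be sketched with the SVRG update of Johnson and Zhang applied to least squares. Each step uses the sample gradient at the current \(w\), minus the same sample's gradient at a periodic snapshot \(\tilde w\), plus the average gradient at \(\tilde w\). This is a minimal C++ sketch, not Shogun code; the function names and the parameters `eta`, `epochs`, `inner_steps` and `seed` are assumptions for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

using Vec = std::vector<double>;  // hypothetical stand-in for Shogun's vector type

// Gradient of f_i(w) = (y_i - w^T x_i)^2 / 2, which is -(y_i - w^T x_i) x_i.
Vec sample_gradient(const Vec& w, const Vec& x, double y) {
    assert(w.size() == x.size());
    double r = y;
    for (std::size_t j = 0; j < w.size(); ++j) r -= w[j] * x[j];
    Vec g(w.size());
    for (std::size_t j = 0; j < w.size(); ++j) g[j] = -r * x[j];
    return g;
}

// Average gradient (1/n) sum_i grad f_i(w).
Vec average_gradient(const Vec& w, const std::vector<Vec>& X, const Vec& y) {
    Vec mu(w.size(), 0.0);
    for (std::size_t i = 0; i < X.size(); ++i) {
        const Vec g = sample_gradient(w, X[i], y[i]);
        for (std::size_t j = 0; j < w.size(); ++j) mu[j] += g[j];
    }
    for (double& v : mu) v /= static_cast<double>(X.size());
    return mu;
}

// SVRG-style loop. The estimate grad f_i(w) - grad f_i(w_snap) + mu is unbiased,
// and its variance shrinks as w and w_snap approach the optimum. The last inner
// iterate becomes the next snapshot (one of the options in the SVRG paper).
Vec svrg_least_squares(const std::vector<Vec>& X, const Vec& y, double eta,
                       int epochs, int inner_steps, unsigned seed) {
    assert(!X.empty() && X.size() == y.size());
    const std::size_t n = X.size(), d = X[0].size();
    std::mt19937 rng(seed);
    std::uniform_int_distribution<std::size_t> pick(0, n - 1);
    Vec w(d, 0.0);
    for (int s = 0; s < epochs; ++s) {
        const Vec w_snap = w;
        const Vec mu = average_gradient(w_snap, X, y);  // one full pass at the snapshot
        for (int t = 0; t < inner_steps; ++t) {
            const std::size_t i = pick(rng);  // uniform random sample index
            const Vec g_cur = sample_gradient(w, X[i], y[i]);
            const Vec g_snap = sample_gradient(w_snap, X[i], y[i]);
            for (std::size_t j = 0; j < d; ++j)
                w[j] -= eta * (g_cur[j] - g_snap[j] + mu[j]);
        }
    }
    return w;
}
```

On a consistent system such as X = {{1,0},{0,1},{1,1}}, y = {1,2,3} with a small step size, the iterates should approach w = (1, 2). The average gradient is recomputed only once per epoch, which is what keeps the per-step cost at a single sample.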

Definition at line 70 of file FirstOrderSAGCostFunction.h.

Public Member Functions

| Return type | Member function |
|---|---|
| virtual int32_t | get_sample_size ()=0 |
| virtual SGVector< float64_t > | get_average_gradient ()=0 |
| virtual SGVector< float64_t > | get_gradient ()=0 |
| virtual float64_t | get_cost ()=0 |
| virtual void | begin_sample ()=0 |
| virtual bool | next_sample ()=0 |
| virtual SGVector< float64_t > | obtain_variable_reference ()=0 |

virtual void begin_sample ()=0 (inherited)

Initialize the generation of a sample sequence.

virtual SGVector< float64_t > get_average_gradient ()=0

Get the AVERAGE gradient with respect to the target variables.

Note that the average gradient equals the expected value of the sample gradient returned by get_gradient() when samples are drawn uniformly at random.

WARNING: This method returns \( \frac{\sum_i^n{ \frac{\partial f_i(w) }{\partial w} }}{n}\)

For least squares, this is the value of \( \frac{\frac{\partial f(w) }{\partial w}}{n} \) for the given \(w\), where \(f(w)=\frac{ \sum_i^n{ (y_i-w^T x_i)^2 } }{2}\).
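For least squares, splitting the cost per sample as \(f_i(w)=\frac{(y_i-w^T x_i)^2}{2}\), so that \(f(w)=\sum_i^n f_i(w)\), makes both statements above concrete: the average gradient is \(\frac{1}{n}\) times the full gradient, and it is the expectation of a sample gradient when \(i\) is drawn uniformly.

\[ \frac{\sum_i^n{ \frac{\partial f_i(w) }{\partial w} }}{n} = -\frac{1}{n}\sum_i^n{ (y_i-w^T x_i)\,x_i } = \frac{\frac{\partial f(w) }{\partial w}}{n} = \mathbb{E}_{i \sim \mathrm{Uniform}\{1,\dots,n\}}\left[ \frac{\partial f_i(w) }{\partial w} \right] \]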

virtual float64_t get_cost ()=0

Get the cost given the current target variables.

For the least squares cost function, this is the value of \(f(w)\).

Implements FirstOrderStochasticCostFunction.

virtual SGVector< float64_t > get_gradient ()=0

Get the SAMPLE gradient with respect to the target variables.

WARNING: This method returns \( \frac{\partial f_i(w) }{\partial w} \), not \(\sum_i^n{ \frac{\partial f_i(w) }{\partial w} }\).

For the least squares cost function, this is the value of \(\frac{\partial f_i(w) }{\partial w}\) for the given \(w\), where the index \(i\) is selected by next_sample().

Implements FirstOrderStochasticCostFunction.
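For the least squares per-sample term \(f_i(w)=\frac{(y_i-w^T x_i)^2}{2}\) (an illustrative split of \(f(w)\)), the sample gradient follows from the chain rule with the residual \(r_i = y_i - w^T x_i\):

\[ \frac{\partial f_i(w) }{\partial w} = r_i \frac{\partial r_i}{\partial w} = -(y_i-w^T x_i)\,x_i \]

Summing this over all \(n\) samples gives \(\frac{\partial f(w)}{\partial w}\), which is the quantity the warning distinguishes from the single-sample value.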

virtual int32_t get_sample_size ()=0

Get the sample size \(n\).

virtual bool next_sample ()=0 (inherited)

Get the next sample.

virtual SGVector< float64_t > obtain_variable_reference ()=0

Obtain a reference to the target variables. Minimizers modify the target variables in place.

This method is called by FirstOrderMinimizer::minimize().

For least squares, this is \(w\).
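To tie the members together, here is a hedged C++ sketch of a least squares implementation and a minimizer-style pass that talks to it only through the interface. Everything in it is hypothetical: `SAGCostFunctionSketch`, `LeastSquaresSketch` and `sgd_pass` are illustrative names, `std::vector<double>` replaces `SGVector< float64_t >`, obtain_variable_reference() returns a plain C++ reference to model in-place modification, and next_sample() is assumed to visit samples in order and return false after the last one. The real Shogun classes may behave differently.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Hypothetical mirror of the member table (not the Shogun class).
class SAGCostFunctionSketch {
public:
    virtual ~SAGCostFunctionSketch() = default;
    virtual std::int32_t get_sample_size() = 0;
    virtual std::vector<double> get_average_gradient() = 0;
    virtual std::vector<double> get_gradient() = 0;
    virtual double get_cost() = 0;
    virtual void begin_sample() = 0;
    virtual bool next_sample() = 0;
    virtual std::vector<double>& obtain_variable_reference() = 0;
};

// Least squares f(w) = sum_i (y_i - w^T x_i)^2 / 2, with w starting at zero.
// Assumption: samples are visited in order, one pass per begin_sample().
class LeastSquaresSketch : public SAGCostFunctionSketch {
public:
    LeastSquaresSketch(std::vector<std::vector<double>> X, std::vector<double> y)
        : X_(std::move(X)), y_(std::move(y)),
          w_(X_.empty() ? std::size_t{0} : X_[0].size(), 0.0) {}

    std::int32_t get_sample_size() override { return static_cast<std::int32_t>(X_.size()); }
    void begin_sample() override { idx_ = -1; }
    bool next_sample() override { return ++idx_ < get_sample_size(); }

    // Gradient of the current sample's cost only.
    std::vector<double> get_gradient() override {
        assert(idx_ >= 0 && idx_ < get_sample_size());
        return sample_gradient(static_cast<std::size_t>(idx_));
    }

    // (1/n) * sum_i grad f_i(w).
    std::vector<double> get_average_gradient() override {
        std::vector<double> avg(w_.size(), 0.0);
        for (std::size_t i = 0; i < X_.size(); ++i) {
            const std::vector<double> g = sample_gradient(i);
            for (std::size_t j = 0; j < avg.size(); ++j) avg[j] += g[j];
        }
        for (double& v : avg) v /= static_cast<double>(X_.size());
        return avg;
    }

    double get_cost() override {
        double total = 0.0;
        for (std::size_t i = 0; i < X_.size(); ++i) {
            const double r = residual(i);
            total += 0.5 * r * r;
        }
        return total;
    }

    // The caller receives the stored w itself, not a copy.
    std::vector<double>& obtain_variable_reference() override { return w_; }

private:
    double residual(std::size_t i) const {
        double r = y_[i];
        for (std::size_t j = 0; j < w_.size(); ++j) r -= w_[j] * X_[i][j];
        return r;
    }
    std::vector<double> sample_gradient(std::size_t i) const {
        const double r = residual(i);
        std::vector<double> g(w_.size());
        for (std::size_t j = 0; j < g.size(); ++j) g[j] = -r * X_[i][j];
        return g;
    }

    std::vector<std::vector<double>> X_;
    std::vector<double> y_;
    std::vector<double> w_;
    std::int32_t idx_ = -1;
};

// One pass of plain SGD driven only through the interface (hypothetical helper).
void sgd_pass(SAGCostFunctionSketch& fn, double eta) {
    std::vector<double>& w = fn.obtain_variable_reference();
    fn.begin_sample();
    while (fn.next_sample()) {
        const std::vector<double> g = fn.get_gradient();
        for (std::size_t j = 0; j < w.size(); ++j) w[j] -= eta * g[j];  // in-place update
    }
}
```

Because `sgd_pass` writes through the reference returned by obtain_variable_reference(), a later get_cost() call sees the updated \(w\) without any copy-back step.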