SHOGUN 4.1.0

|

SHOGUN

4.1.0

|

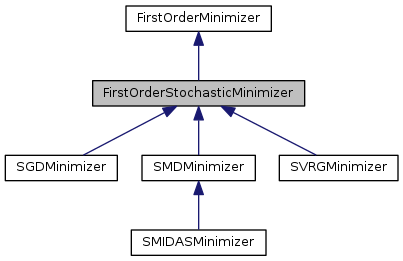

The base class for stochastic first-order gradient-based minimizers.

This class defines the interface shared by these stochastic minimizers.

A stochastic minimizer minimizes a cost function \(f(w)\) (e.g., a FirstOrderStochasticCostFunction) that can be written as a finite sum of differentiable functions \(f_i(w)\):

\[ f(w)=\sum_i{ f_i(w) } \]

We call these differentiable functions \(f_i(w)\) sample functions.

Minimizers of this kind find the optimal target variables using gradient information with respect to those variables. FirstOrderStochasticMinimizer uses a sample gradient \(\frac{\partial f_i(w) }{\partial w}\) (e.g., FirstOrderStochasticCostFunction::get_gradient()), where the index \(i\) is generated by some distribution (e.g., FirstOrderStochasticCostFunction::next_sample()).

In contrast, FirstOrderMinimizer uses the exact gradient \(\frac{\partial f(w) }{\partial w}\) (e.g., FirstOrderCostFunction::get_gradient()).
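To make the difference concrete, here is a minimal C++ sketch of one stochastic step, assuming the index \(i\) is drawn uniformly at random (in Shogun the distribution is whatever the cost function's sampling implements). The `SampleGradient` type and `sgd_step` function are hypothetical illustrations, not part of Shogun's API; a FirstOrderMinimizer would instead use the exact gradient \(\frac{\partial f(w)}{\partial w}\) at every step.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <random>
#include <vector>

// Hypothetical signature for a sample gradient: returns df_i/dw evaluated at w.
using SampleGradient =
    std::function<std::vector<double>(std::size_t i, const std::vector<double>& w)>;

// One stochastic step: draw i uniformly from {0, ..., n-1} (an assumption),
// then move w against the gradient of the single sample function f_i.
std::size_t sgd_step(std::vector<double>& w, std::size_t n,
                     const SampleGradient& grad_i, double step_size,
                     std::mt19937& rng) {
    assert(n > 0);
    std::uniform_int_distribution<std::size_t> pick(0, n - 1);
    const std::size_t i = pick(rng);             // the sampled index i
    const std::vector<double> g = grad_i(i, w);  // sample gradient df_i/dw
    assert(g.size() == w.size());
    for (std::size_t k = 0; k < w.size(); ++k)
        w[k] -= step_size * g[k];
    return i;  // report which sample was used
}
```

Only one sample gradient is evaluated per step, which is what makes each stochastic update cheap compared with an exact-gradient step over all samples.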

For example, consider the least-squares cost function

\[ f(w)=\sum_i{ (y_i-w^T x_i)^2 } \]

If we let \(f_i(w)=(y_i-w^T x_i)^2 \), then \(f(w)=\sum_i{ f_i(w) }\), and \(f_i(w)\) is the sample function for the i-th sample \((x_i,y_i)\).
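For this least-squares example, the sample gradient is \(\frac{\partial f_i(w)}{\partial w} = -2\,(y_i - w^T x_i)\,x_i\), and summing it over all samples gives the exact gradient. The sketch below computes both; it uses `std::vector<double>` in place of Shogun's SGVector, and the helper names (`dot`, `sample_cost`, `sample_gradient`, `full_gradient`) are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;

double dot(const Vec& a, const Vec& b) {
    assert(a.size() == b.size());
    double s = 0.0;
    for (std::size_t k = 0; k < a.size(); ++k) s += a[k] * b[k];
    return s;
}

// Sample function f_i(w) = (y_i - w^T x_i)^2 for one sample (x_i, y_i).
double sample_cost(const Vec& w, const Vec& x_i, double y_i) {
    const double r = y_i - dot(w, x_i);
    return r * r;
}

// Sample gradient df_i/dw = -2 (y_i - w^T x_i) x_i.
Vec sample_gradient(const Vec& w, const Vec& x_i, double y_i) {
    const double r = y_i - dot(w, x_i);
    Vec g(x_i.size());
    for (std::size_t k = 0; k < x_i.size(); ++k) g[k] = -2.0 * r * x_i[k];
    return g;
}

// Exact gradient of f(w) = sum_i f_i(w): the sum of all sample gradients.
Vec full_gradient(const Vec& w, const std::vector<Vec>& X, const Vec& y) {
    assert(X.size() == y.size());
    Vec g(w.size(), 0.0);
    for (std::size_t i = 0; i < X.size(); ++i) {
        const Vec gi = sample_gradient(w, X[i], y[i]);
        for (std::size_t k = 0; k < w.size(); ++k) g[k] += gi[k];
    }
    return g;
}
```

For \(w=(1,0)\) with samples \(((1,2),2)\) and \(((3,4),1)\), the two sample costs are 1 and 4, and the exact gradient is \((10,12)\).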

Definition at line 71 of file FirstOrderStochasticMinimizer.h.

Public Member Functions

| Return type | Member |
| --- | --- |
| | FirstOrderStochasticMinimizer () |
| | FirstOrderStochasticMinimizer (FirstOrderStochasticCostFunction *fun) |
| virtual | ~FirstOrderStochasticMinimizer () |
| virtual bool | supports_batch_update () const |
| virtual void | set_gradient_updater (DescendUpdater *gradient_updater) |
| virtual float64_t | minimize ()=0 |
| virtual void | set_number_passes (int32_t num_passes) |
| virtual void | load_from_context (CMinimizerContext *context) |
| virtual void | set_learning_rate (LearningRate *learning_rate) |
| virtual int32_t | get_iteration_counter () |
| virtual void | set_cost_function (FirstOrderCostFunction *fun) |
| virtual CMinimizerContext * | save_to_context () |
| virtual void | set_penalty_weight (float64_t penalty_weight) |
| virtual void | set_penalty_type (Penalty *penalty_type) |

Protected Member Functions

| Return type | Member |
| --- | --- |
| virtual void | do_proximal_operation (SGVector< float64_t > variable_reference) |
| virtual void | update_context (CMinimizerContext *context) |
| virtual void | init_minimization () |
| virtual float64_t | get_penalty (SGVector< float64_t > var) |
| virtual void | update_gradient (SGVector< float64_t > gradient, SGVector< float64_t > var) |

Protected Attributes

| Type | Attribute |
| --- | --- |
| DescendUpdater * | m_gradient_updater |
| int32_t | m_num_passes |
| int32_t | m_cur_passes |
| int32_t | m_iter_counter |
| LearningRate * | m_learning_rate |
| FirstOrderCostFunction * | m_fun |
| Penalty * | m_penalty_type |
| float64_t | m_penalty_weight |

Default constructor

Definition at line 75 of file FirstOrderStochasticMinimizer.h.

Constructor

| Parameter | Description |
| --- | --- |
| fun | stochastic cost function |

Definition at line 84 of file FirstOrderStochasticMinimizer.h.

[virtual]

Destructor

Definition at line 92 of file FirstOrderStochasticMinimizer.h.

Apply the proximal operation to the given variable in place.

| Parameter | Description |
| --- | --- |
| variable_reference | the variable to be updated in place |

Definition at line 167 of file FirstOrderStochasticMinimizer.h.
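What the proximal operation does depends on the penalty. As an illustration only, assuming the L2 penalty \(\lambda \frac{w^T w}{2}\) described on this page and a step size \(\eta\), the proximal step has the closed form \(w \leftarrow \frac{w}{1+\eta\lambda}\). The function name `l2_proximal_in_place` is hypothetical, and this is not necessarily how Shogun implements do_proximal_operation.

```cpp
#include <cassert>
#include <vector>

// In-place proximal step for the L2 penalty (lambda/2) * w^T w with step size eta:
//   prox(v) = argmin_u (1 / (2 eta)) ||u - v||^2 + (lambda / 2) ||u||^2
//           = v / (1 + eta * lambda)
void l2_proximal_in_place(std::vector<double>& variable, double eta, double lambda) {
    assert(eta > 0.0 && lambda >= 0.0);
    const double shrink = 1.0 / (1.0 + eta * lambda);
    for (double& v : variable) v *= shrink;  // scale every coordinate toward zero
}
```

Setting the gradient of the proximal objective to zero, \((u - v)/\eta + \lambda u = 0\), gives the closed form directly.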

[virtual]

Get the number of samples (or mini-batches) the minimizer has used.

Definition at line 160 of file FirstOrderStochasticMinimizer.h.

Get the penalty value for the given target variables. For the L2 penalty with target variable \(w\), the penalty value is \(\lambda \frac{w^T w}{2}\), where \(\lambda\) is the penalty weight.

| Parameter | Description |
| --- | --- |
| var | the variable used in regularization |

Definition at line 164 of file FirstOrderMinimizer.h.
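A minimal sketch of the L2 penalty value described above, with `std::vector<double>` standing in for SGVector; the function name `l2_penalty` is a hypothetical illustration.

```cpp
#include <cassert>
#include <vector>

// L2 penalty value lambda * (w^T w) / 2 for target variables w.
double l2_penalty(const std::vector<double>& w, double lambda) {
    assert(lambda >= 0.0);
    double sq = 0.0;
    for (double v : w) sq += v * v;  // w^T w
    return lambda * sq / 2.0;
}
```

For \(w=(3,4)\) and \(\lambda=0.5\), this gives \(0.5 \cdot 25 / 2 = 6.25\).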

[protected, virtual]

Initialize the minimization process.

Reimplemented in SVRGMinimizer, SMIDASMinimizer, SMDMinimizer, and SGDMinimizer.

Definition at line 203 of file FirstOrderStochasticMinimizer.h.

[virtual]

Load the given context object to restore mutable variables. This is typically used in deserialization.

| Parameter | Description |
| --- | --- |
| context | a context object |

Reimplemented from FirstOrderMinimizer.

Reimplemented in SMDMinimizer and SMIDASMinimizer.

Definition at line 136 of file FirstOrderStochasticMinimizer.h.

[pure virtual]

Perform the minimization and return the optimal value.

Implements FirstOrderMinimizer.

Implemented in SMIDASMinimizer, SVRGMinimizer, SGDMinimizer, and SMDMinimizer.
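Each concrete minimizer listed above defines its own loop. As a rough, hypothetical sketch of how the pieces documented on this page might fit together: each pass visits every sample once (in order here, for simplicity, rather than via a sampling distribution), adds the L2 penalty gradient \(\lambda w\), takes a plain descent step with a fixed step size, and counts samples and passes in the spirit of m_iter_counter and m_cur_passes. `RunStats` and `minimize_sketch` are illustrative names, not Shogun's.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

using Vec = std::vector<double>;

struct RunStats {
    double final_cost;  // penalized objective after the last pass
    int iter_counter;   // samples used, cf. m_iter_counter
    int passes_done;    // passes completed, cf. m_cur_passes
};

// Hypothetical pass-based minimize(): num_passes sweeps over n samples.
RunStats minimize_sketch(Vec& w, std::size_t n, int num_passes,
                         const std::function<Vec(std::size_t, const Vec&)>& grad_i,
                         const std::function<double(const Vec&)>& cost,
                         double step_size, double lambda) {
    RunStats s{0.0, 0, 0};
    for (int pass = 0; pass < num_passes; ++pass) {
        for (std::size_t i = 0; i < n; ++i) {
            Vec g = grad_i(i, w);  // sample gradient, computed before the step
            assert(g.size() == w.size());
            for (std::size_t k = 0; k < w.size(); ++k) {
                g[k] += lambda * w[k];     // add L2 penalty gradient
                w[k] -= step_size * g[k];  // plain descent step
            }
            ++s.iter_counter;
        }
        ++s.passes_done;
    }
    double sq = 0.0;
    for (double v : w) sq += v * v;
    s.final_cost = cost(w) + lambda * sq / 2.0;
    return s;
}
```

With num_passes set to 1, every sample is used exactly once, matching the meaning of set_number_passes.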

[virtual, inherited]

Return a context object that stores mutable variables. This is typically used in serialization.

Reimplemented in LBFGSMinimizer and NLOPTMinimizer.

Definition at line 103 of file FirstOrderMinimizer.h.

[virtual, inherited]

Set the cost function used by the minimizer.

| Parameter | Description |
| --- | --- |
| fun | the cost function |

Definition at line 92 of file FirstOrderMinimizer.h.

[virtual]

Set the gradient updater.

| Parameter | Description |
| --- | --- |
| gradient_updater | the gradient updater |

Definition at line 104 of file FirstOrderStochasticMinimizer.h.
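The gradient updater decides how a gradient and a learning rate become a change in the variable. The sketch below uses a hypothetical `Updater` interface (it does not reproduce DescendUpdater's actual methods) with two common rules: plain gradient descent and classical momentum.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;

// Hypothetical updater interface (not Shogun's DescendUpdater API).
struct Updater {
    virtual ~Updater() = default;
    virtual void update(Vec& w, const Vec& gradient, double learning_rate) = 0;
};

// Plain gradient descent: w <- w - eta * g.
struct PlainDescent : Updater {
    void update(Vec& w, const Vec& g, double eta) override {
        assert(w.size() == g.size());
        for (std::size_t k = 0; k < w.size(); ++k) w[k] -= eta * g[k];
    }
};

// Momentum: v <- mu * v + eta * g, then w <- w - v (v persists across calls).
struct Momentum : Updater {
    explicit Momentum(double mu) : mu_(mu) {}
    void update(Vec& w, const Vec& g, double eta) override {
        assert(w.size() == g.size());
        if (v_.size() != w.size()) v_.assign(w.size(), 0.0);
        for (std::size_t k = 0; k < w.size(); ++k) {
            v_[k] = mu_ * v_[k] + eta * g[k];
            w[k] -= v_[k];
        }
    }
private:
    double mu_;
    Vec v_;
};
```

Keeping the update rule behind an interface lets the same minimizer loop switch between rules without changing how gradients are computed.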

[virtual]

Set the learning rate of the minimizer.

| Parameter | Description |
| --- | --- |
| learning_rate | learning rate (step size) |

Definition at line 151 of file FirstOrderStochasticMinimizer.h.
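A learning-rate object maps the iteration count to a step size. As an assumption-laden sketch (the `StepSize` interface and both schedules are illustrative, not Shogun's LearningRate API), here are a constant step and an inverse-decay schedule \(\eta_t = \frac{\eta_0}{1 + d\,t}\).

```cpp
#include <cassert>

// Hypothetical learning-rate interface: step size for iteration t (0-based).
struct StepSize {
    virtual ~StepSize() = default;
    virtual double at(int t) const = 0;
};

// Same step size at every iteration.
struct ConstantStep : StepSize {
    explicit ConstantStep(double eta) : eta_(eta) {}
    double at(int) const override { return eta_; }
private:
    double eta_;
};

// eta_t = eta0 / (1 + decay * t): shrinks the step as more samples are used.
struct InverseDecayStep : StepSize {
    InverseDecayStep(double eta0, double decay) : eta0_(eta0), decay_(decay) {
        assert(eta0 > 0.0 && decay >= 0.0);
    }
    double at(int t) const override { return eta0_ / (1.0 + decay_ * t); }
private:
    double eta0_;
    double decay_;
};
```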

[virtual]

Set the number of passes through all data points (samples). For example, num_passes=1 means going through all data points once.

Recall that a stochastic cost function \(f(w)\) can be written as \(\sum_i{ f_i(w) }\), where \(f_i(w)\) is the differentiable function for the i-th sample.

| Parameter | Description |
| --- | --- |
| num_passes | the number of passes through the data |

Definition at line 125 of file FirstOrderStochasticMinimizer.h.

[virtual, inherited]

Set the type of penalty, for example an L2 penalty.

| Parameter | Description |
| --- | --- |
| penalty_type | the type of penalty; if NULL is given, regularization is disabled |

Definition at line 137 of file FirstOrderMinimizer.h.

[virtual, inherited]

Set the penalty weight.

| Parameter | Description |
| --- | --- |
| penalty_weight | the penalty weight, which is expected to be positive |

Definition at line 126 of file FirstOrderMinimizer.h.

[virtual]

Whether the minimizer supports batch updates.

Implements FirstOrderMinimizer.

Definition at line 98 of file FirstOrderStochasticMinimizer.h.

[protected, virtual]

Update a context object to store mutable variables.

| Parameter | Description |
| --- | --- |
| context | a context object |

Reimplemented from FirstOrderMinimizer.

Reimplemented in SMDMinimizer and SMIDASMinimizer.

Definition at line 187 of file FirstOrderStochasticMinimizer.h.
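update_context and load_from_context are meant to act as inverses for the minimizer's mutable state. The sketch below models that round trip with a hypothetical string-keyed map standing in for CMinimizerContext; the key names and the `CounterState` struct are illustrative assumptions only, not Shogun's serialization format.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical stand-in for CMinimizerContext: a string-keyed store.
using Context = std::map<std::string, int>;

struct CounterState {
    int iter_counter = 0;  // samples/mini-batches used so far
    int cur_passes = 0;    // pass counter over the data

    // Write mutable variables into the context (cf. update_context).
    void update_context(Context& ctx) const {
        ctx["iter_counter"] = iter_counter;
        ctx["cur_passes"] = cur_passes;
    }

    // Restore mutable variables from the context (cf. load_from_context).
    void load_from_context(const Context& ctx) {
        assert(ctx.count("iter_counter") == 1 && ctx.count("cur_passes") == 1);
        iter_counter = ctx.at("iter_counter");
        cur_passes = ctx.at("cur_passes");
    }
};
```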

[protected, virtual, inherited]

Add the gradient of the penalty with respect to the target variables to the unpenalized gradient. For least squares with an L2 penalty,

\[ L2f(w)=f(w) + L2(w) \]

where \( f(w)=\sum_i{(y_i-w^T x_i)^2}\) is the least-squares cost function and \(L2(w)=\lambda \frac{w^T w}{2}\) is the L2 penalty.

The target variable is \(w\), the unpenalized gradient is \(\frac{\partial f(w) }{\partial w}\), and the gradient of the penalty with respect to the target variable is \(\frac{\partial L2(w) }{\partial w} = \lambda w\).

| Parameter | Description |
| --- | --- |
| gradient | unpenalized gradient with respect to its target variable |
| var | the target variable |

Definition at line 190 of file FirstOrderMinimizer.h.
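For the L2 penalty, the term added to the unpenalized gradient is \(\lambda w\). A minimal in-place sketch, with `std::vector<double>` standing in for SGVector and a hypothetical function name `add_l2_penalty_gradient`:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Add d/dw [lambda * w^T w / 2] = lambda * w to an unpenalized gradient, in place.
void add_l2_penalty_gradient(std::vector<double>& gradient,
                             const std::vector<double>& var, double lambda) {
    assert(gradient.size() == var.size());
    for (std::size_t k = 0; k < var.size(); ++k) gradient[k] += lambda * var[k];
}
```

With gradient \((1,-1)\), \(w=(2,4)\), and \(\lambda=0.5\), the penalized gradient is \((2,1)\).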

[protected]

current pass through the data

Definition at line 215 of file FirstOrderStochasticMinimizer.h.

[protected, inherited]

Cost function

Definition at line 205 of file FirstOrderMinimizer.h.

[protected]

the gradient update step

Definition at line 200 of file FirstOrderStochasticMinimizer.h.

[protected]

number of samples/mini-batches used so far

Definition at line 218 of file FirstOrderStochasticMinimizer.h.

[protected]

learning rate object

Definition at line 221 of file FirstOrderStochasticMinimizer.h.

[protected]

number of passes (iterations) through the data

Definition at line 212 of file FirstOrderStochasticMinimizer.h.

[protected, inherited]

the type of penalty

Definition at line 208 of file FirstOrderMinimizer.h.

[protected, inherited]

the penalty weight

Definition at line 211 of file FirstOrderMinimizer.h.